Supercomputing power now delivered from the cloud

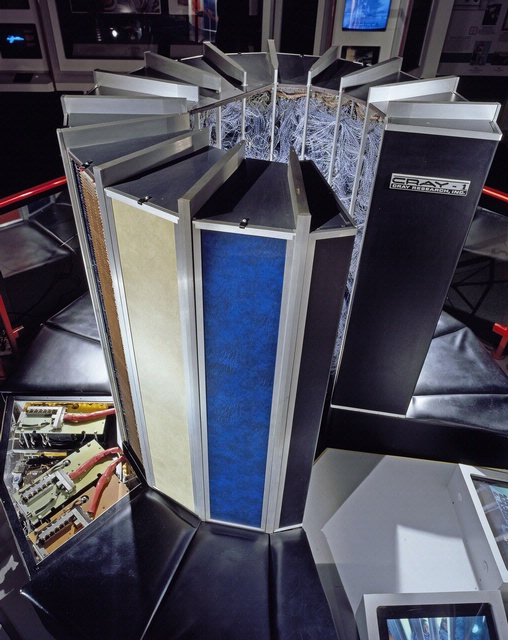

If you visit the Smithsonian Institution in Washington, DC, you can view one of the original Cray supercomputers. It is a gargantuan array of boxes, about seven feet high, that cost between $6 million and $8 million when it was sold and installed in the 1970s. Only government agencies, big corporations and big universities could afford that kind of power.

Now that the cloud is upon us, so is supercomputing power, rentable by the hour. Anyone doing research, or trying to solve problems that require massive processing power, can tap into a supercomputer in the cloud for as long as needed.

The New York Times' Steve Lohr reports that at an Amazon Web Services conference held in New York this week, Cycle Computing, which offers such a service, demonstrated a massive 50,000-processor supercomputing cluster, built on Amazon Web Services, that runs drug compound simulations. "That software bundle performed a daunting simulation of 21 million chemical compounds to see if they would bind with a protein target. That run ate up the equivalent of 12.5 processor years, but it was completed in less than three hours. The computing cost was less than $4,900 an hour."

At roughly $5,000 an hour, that is a bargain for short jobs. For its part, Cray, one of the original supercomputer makers, now offers low-end models for $200,000. Since $200,000 buys about 40 hours of cloud time at $5,000 an hour, cloud supercomputing jobs that add up to more than 40 hours over a given stretch of time may start to lose their price advantage over an on-premises system, at least on purchase price alone.
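The 40-hour threshold is simple break-even arithmetic. The following Python sketch reproduces it using the article's round figures (about $5,000 per cloud hour versus a $200,000 purchase). It is an illustration under stated assumptions, not a cost model: it counts purchase price only and ignores power, cooling, staff and maintenance, and the function names `break_even_hours` and `cheaper_option` are hypothetical.

```python
# Break-even sketch using the article's round figures (assumptions):
# cloud rental at about $5,000 per hour versus a low-end on-premises
# system at about $200,000. Only the purchase price is counted; power,
# cooling, staff and maintenance costs are deliberately ignored.

CLOUD_RATE_PER_HOUR = 5_000   # dollars per hour (rounded up from "< $4,900")
ON_PREM_PURCHASE = 200_000    # dollars, one-time (low-end Cray model)


def break_even_hours(purchase_price: float, hourly_rate: float) -> float:
    """Return the rental hours at which cloud spend equals the purchase price."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return purchase_price / hourly_rate


def cheaper_option(hours_needed: float) -> str:
    """Say which option costs no more for a workload of `hours_needed` hours.

    Renting wins up to and including the break-even point; beyond it,
    buying is cheaper on purchase price alone.
    """
    cloud_cost = hours_needed * CLOUD_RATE_PER_HOUR
    return "cloud" if cloud_cost <= ON_PREM_PURCHASE else "on-premises"


if __name__ == "__main__":
    hours = break_even_hours(ON_PREM_PURCHASE, CLOUD_RATE_PER_HOUR)
    print(f"Break-even at {hours:.0f} hours")  # Break-even at 40 hours
    for h in (3, 40, 100):
        print(h, cheaper_option(h))
```

A three-hour run like Cycle's demonstration lands well on the cloud side of the line; a workload that keeps the machine busy for weeks does not.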

Furthermore, a federal government report issued at the end of last year questions whether the cloud is the best option for research applications. Cloud computing may offer good value for many front-line and transactional applications, but the costs and performance issues of applying it to specialized, deep analytical, or scientific environments may still be prohibitive. The report examined the economics of the U.S. Department of Energy's Magellan project (“a cloud for science”), launched two years ago to investigate the potential role of cloud computing in meeting the data-intensive computing needs of the DOE’s Office of Science.

Nevertheless, there is clear momentum toward delivering more sophisticated compute jobs from the cloud. Scientific analysis and simulation may be the natural applications for supercomputers, but Cycle CEO Jason Stowe says many organizations could use supercomputing power to pull insights from Big Data. Just about every organization is facing a Big Data deluge, and those that can find patterns and draw actionable insights from it are ahead of the game.

Cycle Computing says its top users run engineering, simulation, rendering, testing, drug discovery, bioinformatics, and finance applications.

(Photo: National Air & Space Museum, Smithsonian Institution.)

This post was originally published on Smartplanet.com